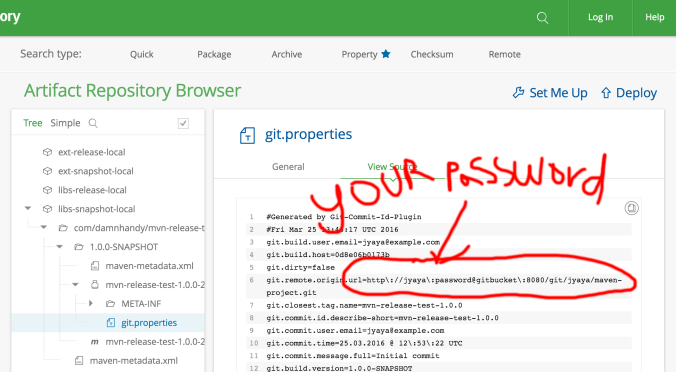

A few days ago, I noticed something very troubling after using the Maven release plugin to publish a release artifact to an internal Maven repository: my git password was exposed in the Maven build output as well as in a git.properties file that the Maven Git commit ID plugin generated. These files are now sitting in Artifactory for all to read.

Not cool. For Maven-based projects, I typically use the Maven Release Plugin. Because we’d like to track some of the git metadata about how the build was produced, we also use the Maven Git commit ID plugin, which plays quite nicely with Spring Boot. So I was very disturbed to see my password all over the place.

What is happening here?

First of all, I should be clear that the project in question is using the Maven command line wrapper and pulling down Maven 3.3.9, which is the latest at the time of this writing. I’m also using Git 2.7.4 and the current release of the Maven Git commit ID plugin, which was 2.2.0. For the most part, everything is current.

This issue is not specific to any single plugin (though it looks like the Maven Release Plugin is the core offender). Rather, it only manifests itself under certain conditions, when the plugins interact with one another during the release process. The Maven plugins in question are the Maven Release Plugin and the Maven Git Commit ID Plugin.

The combination of these plugins will expose your Git password when using Git over either HTTP or HTTPS when the Maven Release Plugin's release:prepare and release:perform goals are invoked, but curiously not when the package, install, or deploy lifecycle phases are invoked. Additionally, if you’re using the Maven Git Commit ID Plugin to capture commit information in your build, the generated git.properties will contain your username and password when using the default settings, and this file will be visible in the Maven repository your artifact is published to. What appears to be happening is that the Maven Release Plugin is rewriting the git origin URI and including the credentials in the URI. Thus, when the Git Commit ID Plugin goes to resolve the git.remote.origin.url value, it now includes the username and password as well.

Demonstrating the issue

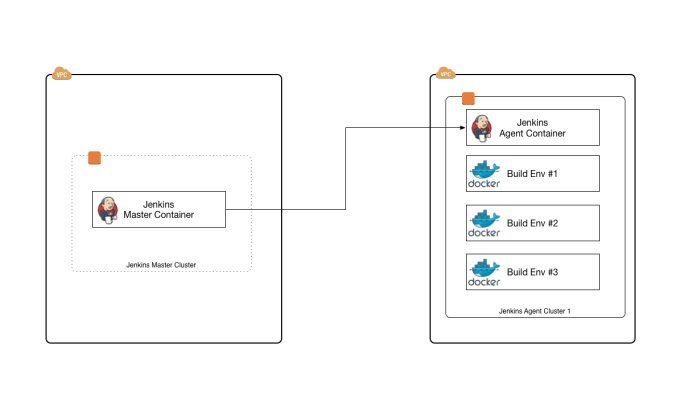

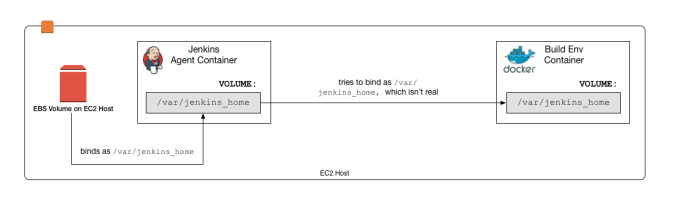

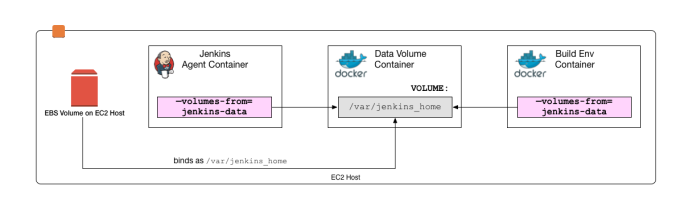

Reproducing this issue was kind of a pain in the ass, but with tools like Docker and Docker Compose, it’s a little less painful. I have created an environment that does the following:

- Creates an instance of Artifactory OSS for our Maven repository

- Creates an instance of Gitbucket for the git repo manager

- Creates a user named John Yaya who has a username of `jyaya` and a password of `password`

- Creates a container with Maven and Git which also mounts a sample Maven project to demo the issue.

The project is here:

https://github.com/damnhandy/maven-publish-issue

Check the README.md for details on how to run it. It works fine under Docker Machine on OS X and on Linux. This project will start up both Artifactory and Gitbucket, start a “workspace” container, and drop you into that container, where you’ll be able to execute the Maven and git commands. The issue is 100% reproducible whenever you perform a release.

How can you prevent this?

There are a few ways you can prevent your passwords from being exposed:

Use SSH instead of HTTP/HTTPS in your CI setups

SSH doesn’t have this issue and won’t expose your passwords, unless of course you use SSH with usernames and passwords. Granted, SSH may not be appropriate in all cases. If you’re in an enterprisey environment, this may be more complicated. SSH is also kind of a pain in the ass on Windows. If SSH isn’t an option, there are a few more options.
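If you go the SSH route, one place to make the switch is the scm section of your POM, since that is where the release plugin reads the repository location from. Here is a minimal sketch, assuming a hypothetical host and repository path (git.example.com and jyaya/sample-project are placeholders, not values taken from the demo project):

```xml
<!-- Hypothetical host and repository path; substitute your own. -->
<scm>
  <!-- Read-only connection -->
  <connection>scm:git:ssh://git@git.example.com/jyaya/sample-project.git</connection>
  <!-- Used by the release plugin when it commits and tags -->
  <developerConnection>scm:git:ssh://git@git.example.com/jyaya/sample-project.git</developerConnection>
  <!-- Browsable web URL; no credentials belong here either -->
  <url>https://git.example.com/jyaya/sample-project</url>
</scm>
```

With key-based authentication there is no password in the URL for any plugin to pick up in the first place.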

Use Maven Release plugin 2.4.2 or higher

By default, the super POM in Maven 3.3.9 uses version 2.3.2 of the Maven Release Plugin. The issue is fixed as of 2.4.2, but if you don’t define the version to use, you’ll be exposed. You have to explicitly define the version of the Maven Release Plugin to use:

    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-release-plugin</artifactId>
      <version>2.5.3</version>
    </plugin>

This will keep your passwords out of your logs in Jenkins, TravisCI, or other CI environments, but it doesn’t address the fact that the Git Commit ID Plugin, and probably others, will still render the username and password in the URI.
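For context, here is roughly where that plugin declaration can live in a pom.xml. This is a sketch of one common layout, not the only option: pinning the version under pluginManagement overrides the default inherited from the super POM, and a parent POM can do this once for all of its modules.

```xml
<build>
  <pluginManagement>
    <plugins>
      <!-- Pin the release plugin so the super POM default is not used -->
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-release-plugin</artifactId>
        <version>2.5.3</version>
      </plugin>
    </plugins>
  </pluginManagement>
</build>
```

Declaring the same plugin block directly under build/plugins works as well. The point is simply that the version is stated somewhere in your own POM hierarchy.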

Exclude the git.remote.origin.url property from your build

If you’re using the Git Commit ID Plugin, exclude the git.remote.origin.url property from your build:

    <configuration>
      <excludeProperties>
        <excludeProperty>git.remote.origin.url</excludeProperty>
      </excludeProperties>
    </configuration>

This will completely remove the origin URI from the properties file. This can be annoying if you want to track where the code came from, which is handy if you have more than one Git repo manager hosting the same code (e.g. you have a mirror repo that is local to one of your global offices). I have submitted PR #241, which attempts to strip out the password if it’s found in the URI.
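To show where the exclusion fits, here is a sketch of a complete plugin declaration. The coordinates, the revision goal, and the generateGitPropertiesFile option are written from my recollection of the plugin's documentation rather than copied from the demo project, so check them against the README for the version you use:

```xml
<plugin>
  <groupId>pl.project13.maven</groupId>
  <artifactId>git-commit-id-plugin</artifactId>
  <version>2.2.0</version>
  <executions>
    <execution>
      <goals>
        <!-- Collects the git metadata during the build -->
        <goal>revision</goal>
      </goals>
    </execution>
  </executions>
  <configuration>
    <!-- Write the collected values to git.properties -->
    <generateGitPropertiesFile>true</generateGitPropertiesFile>
    <!-- Leave the origin URL (and any embedded credentials) out -->
    <excludeProperties>
      <excludeProperty>git.remote.origin.url</excludeProperty>
    </excludeProperties>
  </configuration>
</plugin>
```

After a build, it is worth opening the generated git.properties in the packaged artifact to confirm the property is really gone.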

Use Git Commit ID Plugin 2.2.1 or higher

Update: as of 3/26/2016, version 2.2.1 of the Git Commit ID Plugin has been released, and it includes PR #241. That PR fixes the issue altogether.
